Setting up a MariaDB Galera cluster in Centos 6

Synopsis

One of the big draw cards of MariaDB over MySQL is open source support for clustering. While the open source version of MySQL allows for replication amongst a number of read only nodes, MariaDB has true multi-master replication.If you have read the documentation at https://kb.askmonty.org/en/getting-started-with-mariadb-galera-cluster/, you could be forgiven for believing that setting up a cluster is a trivial process. Unfortunately those instructions leave out a few vital details that will prevent your cluster from working. These are the steps I used to get a MariaDB Galera Cluster running in Centos 6.

Configure the YUM repo

Go to https://downloads.mariadb.org/mariadb/repositories/ and select Centos as the OS, and 5.5 as the version. Galera (the clustering component of MariaDB) is only available with version 5.5 at this time.

I have the 64 bit version of Centos, so the resulting file looks like this:

# MariaDB 5.5 CentOS repository list - created 2013-07-03 04:40 UTC

# http://mariadb.org/mariadb/repositories/

[mariadb]

name = MariaDB

baseurl = http://yum.mariadb.org/5.5/centos6-amd64

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck=1

The file for the 32 bit version of Centos looks like:

# MariaDB 5.5 CentOS repository list - created 2013-07-03 04:46 UTC

# http://mariadb.org/mariadb/repositories/

[mariadb]

name = MariaDB

baseurl = http://yum.mariadb.org/5.5/centos6-x86

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck=1

You'll want to save this file into /etc/yum.repo.d/MariaDB.repo.

Install MariaDB

MariaDB is a drop in replacement for MySQL. If you have installed the desktop packages for Centos, chances are you have MySQL installed already. To uninstall it, run the following command:

yum erase *mysql*

Installing MariaDB Galera is done with the following command:

yum install MariaDB-Galera-server MariaDB-client setroubleshoot

You will notice that we are also installing the SELinux Troubleshooter. This will come in handy later.

Initial Database Configuraion

We need to start the MariaDB service. Because it is a drop in replacement for MySQL, you use the same command to start the server as you would to start MySQL.

service mysql start

Upgrading the databases fixes an issue where MySQL Workbench will simply say "fetching..." and will never list the tables available in the database.

mysql_upgrade

It is good practice to secure the new installation. MariaDB provides a script that will run you through the process.

mysql_secure_installation

We also need to setup some passwords. To do that we load the mysql command line client, and log into our database.

mysql -hlocalhost -uroot

The following commands will set passwords on the root account, both when accessed locally, and when accessed from a remote system. Obviously you can change the password in these commands to something a little more secure, but for this tutorial we'll use 'password'.

GRANT ALL PRIVILEGES ON *.* TO 'root'@'localhost' IDENTIFIED BY 'password' WITH GRANT OPTION;

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'password' WITH GRANT OPTION;

FLUSH PRIVILEGES;

exit

The last step is to shutdown MariaDB

service mysql stop

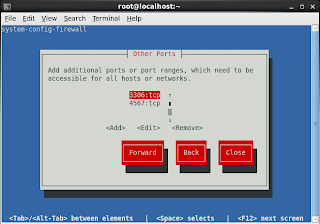

Open the network ports

A MariaDB cluster requires ports 3306 and 4567 to be open. If you use rsync to do the state transfers, you'll also need port 4444 open. You can do by running the command:

system-config-firewall-tui

In the other ports section, you need to open up 3306, 4444 and 4567, all with the tcp protocol.

Fix SELinux Issues

The quick way to fix this is to disable SELinux with the command:

setenforce 0

If you want to leave SELinux enabled, you'll need to restart Centos to allow the SELinux Troubleshooter we installed earlier to pick up on any violations.

When you attempt to run MariaDB in the steps below, SELinux Troubleshooter will report three errors.

Clicking the Details button on each of these alerts provides the commands you need to run to allow MariaDB to run with SELinux enabled.

Start the cluster

MariaDB requires 3 nodes to effectively provide fault tolerance. We'll get two running, but the process is the same for any additional nodes.

Start the first server with the command

mysqld --wsrep_cluster_address=gcomm:// --user=mysql --wsrep_provider=/usr/lib64/galera/libgalera_smm.so --wsrep_sst_auth=root:password

The --wsrep_cluster_address=gcomm:// option defines this as the initial node in the cluster.

The --user=mysql option tells MariaDB to start under the mysql user

The --wsrep_provider=/usr/lib64/galera/libgalera_smm.so option tells MariaDB where the Galera library is.

The --wsrep_sst_auth=root:password option provides the credentials that MariaDB requires when syncing the initial state of the cluster to newly joined nodes. Change the password to reflect the password you set earlier.

Remember that if you have SELinux enabled, it is at this point that the SELinux Troubleshooter should be notifying you of issues. If not, make sure you have restarted Centos to allow the troubleshooter to catch violations.

Joining the cluster

With the first node up, run this command on the second node to join it:

mysqld --wsrep_cluster_address=gcomm://192.168.0.1 --user=mysql --wsrep_provider=/usr/lib64/galera/libgalera_smm.so --wsrep_sst_auth=root:password

The only change here is the --wsrep_cluster_address=gcomm://192.168.0.1 option. We have assumed that the first node has an IP address of 192.168.0.1, and have requested that this second node connect to the first node.

NOTE

One way to avoid editing my.cnf in order to remove gcomm:// is to start all cluster nodes with the following URL: gcomm://node1,node2:port2,node3?pc.wait_prim=no&...

(note the 'pc.wait_prim=no' option which makes the node to wait for primary component indefinitely.) Then bootstrap the primary component by running SET GLOBAL wsrep_provider_options="pc.bootstrap=1"; on any one of the nodes.

Testing the cluster

At this point you should be able to make changes to the database tables on one server and see them reflected on the second server. Your cluster is now up and running!

Making the changes permanent

All the configuration has been defined in command line options, which means that if one of the servers went down, it would not reconnect to the cluster.

To save the settings we supplied on the command line, edit the /etc/my.cnf file, and add the following lines to the end of it. This configuration allows the first node to reconnect to the second.

[mariadb]

wsrep_cluster_address=gcomm://192.168.0.2

wsrep_provider=/usr/lib64/galera/libgalera_smm.so

wsrep_sst_auth=root:password

wsrep_node_address=192.168.0.1

log-error=/var/log/mysql.log

The wsrep_node_address option defines the IP address of the local host. This is optional, but when I ran MariaDB on my VM, it reported that it could not automatically determine the IP address, which is why it is hard coded in this option.

The log-error option defines the log file that MariaDB will write to.

All the other options are exactly the same as what was supplied on the command line.

With this configuration in place you can kill the mysql instance that was started from the command line. The second node is still up, and so the cluster is still up. The first node can then be restarted with the command:

service mysql start

This will start MariaDB and reconnect it to the second node, and thus to the cluster.

We can then follow the same process for the second node. Of course you'll need to change the IP addresses from those listed above. The configuration to add to the /etc/my.cnf file for the second node looks like:

[mariadb]

wsrep_cluster_address=gcomm://192.168.0.1

wsrep_provider=/usr/lib64/galera/libgalera_smm.so

wsrep_sst_auth=root:password

wsrep_node_address=192.168.0.2

log-error=/var/log/mysql.log

All we have done is changes the node it is to connect to, and the IP address of the local host.

The mysql instance started from the command line for the second node can then be killed and restarted as a service. It will then rejoin the first node.

Restarting the cluster

As long as one node is up, the cluster will remain active, and the node that is down will have something to connect to when it starts up. But what happens when all nodes go down?At that point you'll have to restart the cluster on the node that has the version of the database you want to use. So if node 1 went down, and then a day later node 2 went down, it is most likely that node 2 will have the copy of the database you want to keep, and so node 2 will be used to restart the cluster.

Restarting the cluster is best done from the command line. Since all our settings are now stored in the /etc/my.cnf file, restarting the node as the initial node in the cluster can be done with the following command:

mysqld --wsrep_cluster_address=gcomm:// --user=mysql

Just like before, an empty address in the wsrep_cluster_address option defines this node as the initial node in the cluster.

The node one can then be started as a service, and it will connect to node 2, and the cluster will be running once again.

You probably don't want to have a terminal window open with node 2 running, so once node 1 is running you can kill node 2, and restart it as a service. Node 2 will connect to node 1, and the cluster is back in action.

Troubleshooting

Name Resolution

If the node trying to connect to an establish cluster is reporting the error:

130307 15:14:52 [Warning] IP address '192.168.0.1' could not be resolved: Name or service not known

And the node it is trying to connect to is displaying the error:

'wsrep_sst_mysqldump --user 'root' --password 'password' --host '192.168.0.2' --port '3306' --local-port '3306' --socket '/var/lib/mysql/mysql.sock' --gtid '4c754641-e45a-11e2-0800-425dfc14f8f4:390'' failed: 1 (Operation not permitted)

The try adding the following option to the nodes configuration files:

skip-name-resolve

Be aware that by doing this, MariaDB will no longer use credentials with host names. That means you'll have to configure the password on root@127.0.0.1 instead of root@localhost.

WSREP: gcs/src/gcs_group.c:gcs_group_handle_join_msg():719: Will never receive state. Need to abort.

And the node it is trying to connect to is displaying the error:

[ERROR] WSREP: Try 1/3: 'wsrep_sst_mysqldump --user 'root' --password 'password' --host '192.168.0.1' --port '3306' --local-port '3306' --socket '/var/lib/mysql/mysql.sock' --gtid '4c754641-e45a-11e2-0800-425dfc14f8f4:420'' failed: 1 (Operation not permitted)

ERROR 1045 (28000): Access denied for user 'root'@'192.168.0.2' (using password: YES)

Make sure the account on the node trying to connect to the cluster is correct. The established node that is being connected to is trying to send the database state to the connecting node, and is failing to do so because of an authentication error.

rsync: failed to connect to 192.168.0.2: No route to host (113)

It means that the default state transfer method is Rsync, not mysqldump. To use mysqldump, add the setting

wsrep_sst_method=mysqldump

Or you can open firewall port 4444.

sh: wsrep_sst_rsync_wan: command not found

in the log files of the node trying to connect to a cluster, run

cd /usr/bin

ln -s wsrep_sst_rsync wsrep_sst_rsync_wan

SELECT table_schema,

sum(data_length) / 1024 / 1024 "data",

sum(index_length) / 1024 / 1024 "index",

sum( data_length + index_length ) / 1024 / 1024 "total"

FROM information_schema.TABLES

GROUP BY table_schema;

This value includes all the indexes, and represents the amount of data MariaDB will transfer to an empty node when it joins the cluster.

To save yourself some time, initialise the nodes that will join the cluster by dumping the SQL data into a file with the command:

mysqldump -u root -ppassword --all-databases > all-database.sql

And then restoring it on the node to be connected with

mysql -u root -ppassword < all-database.sql

Because the backup will not contain the indexes, and can be compressed, you'll find that you'll be able to get a remote node up to a relatively recent initial state far more quickly than attempting to sync the entire database through the MariaDB state transfer. In my case a 5GB database could be backed up into a 600 MB compressed SQL file.

It doesn't matter if the database changes between when you backed it up and when the node connects, because the state transfer will take care of that for you.

wsrep_sst_method=rsync_wan

to save some bandwidth for state transfers over a WAN connection.

Be aware that by doing this, MariaDB will no longer use credentials with host names. That means you'll have to configure the password on root@127.0.0.1 instead of root@localhost.

Credentials

If the node trying to connect to an establish cluster is reporting the error:WSREP: gcs/src/gcs_group.c:gcs_group_handle_join_msg():719: Will never receive state. Need to abort.

And the node it is trying to connect to is displaying the error:

[ERROR] WSREP: Try 1/3: 'wsrep_sst_mysqldump --user 'root' --password 'password' --host '192.168.0.1' --port '3306' --local-port '3306' --socket '/var/lib/mysql/mysql.sock' --gtid '4c754641-e45a-11e2-0800-425dfc14f8f4:420'' failed: 1 (Operation not permitted)

ERROR 1045 (28000): Access denied for user 'root'@'192.168.0.2' (using password: YES)

Make sure the account on the node trying to connect to the cluster is correct. The established node that is being connected to is trying to send the database state to the connecting node, and is failing to do so because of an authentication error.

Rsync

If you see the error on the established node ofrsync: failed to connect to 192.168.0.2: No route to host (113)

It means that the default state transfer method is Rsync, not mysqldump. To use mysqldump, add the setting

wsrep_sst_method=mysqldump

Or you can open firewall port 4444.

Rsync Wan

If you see the errorsh: wsrep_sst_rsync_wan: command not found

in the log files of the node trying to connect to a cluster, run

cd /usr/bin

ln -s wsrep_sst_rsync wsrep_sst_rsync_wan

File Access

If you get the error File '/var/lib/mysql/aria_log_control' not found make sure that the file is owned my the user mysql. I ran into this issue running mysql_install_db as root, which meant that the file was owned by root.

Tips and Tricks

Initial Setup

You can find out how large your SQL database is with the command:SELECT table_schema,

sum(data_length) / 1024 / 1024 "data",

sum(index_length) / 1024 / 1024 "index",

sum( data_length + index_length ) / 1024 / 1024 "total"

FROM information_schema.TABLES

GROUP BY table_schema;

This value includes all the indexes, and represents the amount of data MariaDB will transfer to an empty node when it joins the cluster.

To save yourself some time, initialise the nodes that will join the cluster by dumping the SQL data into a file with the command:

mysqldump -u root -ppassword --all-databases > all-database.sql

And then restoring it on the node to be connected with

mysql -u root -ppassword < all-database.sql

Because the backup will not contain the indexes, and can be compressed, you'll find that you'll be able to get a remote node up to a relatively recent initial state far more quickly than attempting to sync the entire database through the MariaDB state transfer. In my case a 5GB database could be backed up into a 600 MB compressed SQL file.

It doesn't matter if the database changes between when you backed it up and when the node connects, because the state transfer will take care of that for you.

Save Bandwidth

Use the settingwsrep_sst_method=rsync_wan

to save some bandwidth for state transfers over a WAN connection.

Comments

This helped me get a cluster up and running on Ubuntu.

3 comments to make

1) needed the galera package on ubuntu to provide libgalera_smm.so,

2) needed to do the grant all privileges on all nodes in the cluster, and

3) needed to make sure that the mariadb was binding to physical network addresses rather than localhost

Digital Marketing Course In Hyderabad